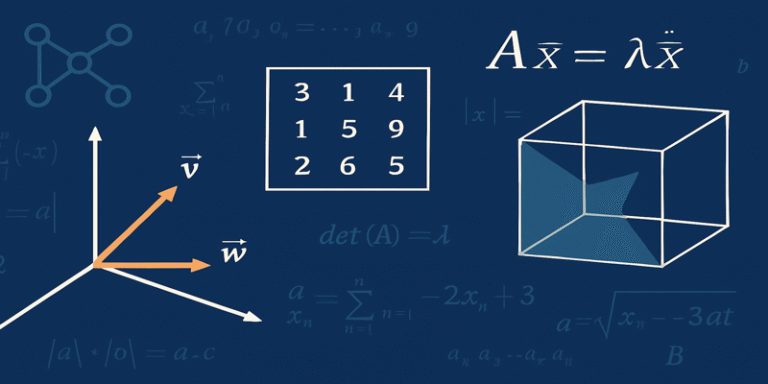

In a world increasingly driven by technology, Linear Algebra has emerged as a fundamental discipline underpinning many of the innovations that shape modern life. From machine learning algorithms and quantum mechanics to computer graphics and robotics, the applications of linear algebra are not only vast but essential. While often introduced in secondary school as matrix operations or systems of linear equations, linear algebra is, at its heart, a powerful mathematical language for understanding multidimensional relationships in a concise and structured way.

What is Linear Algebra?

Linear algebra is the branch of mathematics concerned with vector spaces, the linear transformations between them, and the vectors and matrices used to represent both. In simpler terms, it studies relationships that behave like straight lines and flat planes: scaling an input scales the output by the same factor, and the response to a sum of inputs is the sum of the individual responses. This simplicity of structure, however, belies the depth of the subject and the range of real-world problems it can describe.

A vector can be thought of as a quantity with both magnitude and direction, and a matrix as a rectangular array of numbers that can represent a system of linear equations or a transformation of space. A linear transformation is a function T that maps vectors to other vectors while preserving vector addition and scalar multiplication: T(u + v) = T(u) + T(v) and T(cu) = cT(u) for all vectors u, v and scalars c.
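To make the definition concrete, the following minimal sketch represents a 2×2 matrix as nested Python lists (an illustrative choice; practical code would normally use a numerical library) and checks both linearity conditions for the map x ↦ Ax on sample vectors. The helper names `mat_vec`, `add`, `scale` and `translate` are hypothetical. For contrast, it also tests a translation, which shifts every vector by a fixed amount and is therefore not linear.

```python
def mat_vec(A, x):
    """Multiply matrix A (a list of rows) by vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def add(u, v):
    """Component-wise vector addition."""
    return [ui + vi for ui, vi in zip(u, v)]

def scale(c, u):
    """Multiply every component of u by the scalar c."""
    return [c * ui for ui in u]

def translate(x):
    """Shift x by the fixed vector (1, 0): NOT a linear map."""
    return add(x, [1, 0])

A = [[2, 1],
     [0, 3]]          # an arbitrary example matrix
u, v, c = [1, 4], [-2, 5], 7

# T(u + v) == T(u) + T(v) and T(cu) == cT(u) for T(x) = Ax
additive = mat_vec(A, add(u, v)) == add(mat_vec(A, u), mat_vec(A, v))
homogeneous = mat_vec(A, scale(c, u)) == scale(c, mat_vec(A, u))

# The same additivity test applied to a translation fails.
translation_additive = translate(add(u, v)) == add(translate(u), translate(v))

print(additive, homogeneous, translation_additive)  # True True False
```

Integer entries keep the comparisons exact; with floating-point data one would compare within a tolerance instead.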

The discipline’s utility becomes clearer when one considers the types of problems it can solve. Computing a receiver’s position from GPS satellite signals, recognising faces in photos, and predicting financial trends with statistical models all rely heavily on linear algebraic computations.

Applications in Computer Science and Engineering

One of the most prominent modern applications of linear algebra is in computer graphics. A three-dimensional model is described by its vertices, points in space stored as vectors, while the final image is a grid of pixels. Rotating, scaling, and moving the model, and projecting it onto a two-dimensional screen, all rely on the mathematics of matrices and vectors (Strang, 2016).
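As a rough illustration, the sketch below (plain Python, hypothetical helper names) rotates a vertex 90° about the z-axis, doubles its size, and applies a simple orthographic projection that discards depth. The three steps are combined into one matrix by multiplication, which is how graphics code typically avoids transforming each vertex three separate times. Real renderers usually work with 4×4 matrices in homogeneous coordinates, so that translation can also be written as a matrix, and with perspective rather than orthographic projection; both are omitted here for brevity.

```python
import math

def mat_mul(A, B):
    """Product of matrices A (m x n) and B (n x p), given as lists of rows."""
    return [[sum(A[i][k] * B[k][j] for k in range(len(B)))
             for j in range(len(B[0]))] for i in range(len(A))]

def mat_vec(A, x):
    """Apply matrix A to vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def rotation_z(theta):
    """Rotation by theta radians about the z-axis."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s, 0],
            [s,  c, 0],
            [0,  0, 1]]

def scaling(k):
    """Uniform scaling by factor k."""
    return [[k, 0, 0],
            [0, k, 0],
            [0, 0, k]]

# Orthographic projection onto the xy "screen" plane: keep x and y, drop z.
PROJECT = [[1, 0, 0],
           [0, 1, 0]]

vertex = [1.0, 0.0, 5.0]

# Matrices act right to left: rotate first, then scale, then project.
M = mat_mul(PROJECT, mat_mul(scaling(2), rotation_z(math.pi / 2)))

# Rounding hides the tiny floating-point residue of cos(pi/2).
screen_point = [round(coord, 9) for coord in mat_vec(M, vertex)]
print(screen_point)  # [0.0, 2.0]
```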

In machine learning, algorithms like principal component analysis (PCA), support vector machines, and deep neural networks depend on linear algebra to process, reduce, and interpret high-dimensional datasets. The ability to represent large systems of relationships compactly allows machines to “learn” patterns and make predictions. According to Goodfellow, Bengio, and Courville (2016), “linear algebra is the core of many algorithms in machine learning due to the natural representation of data as vectors and matrices”.
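In a feedforward neural network, each fully connected layer is essentially a matrix-vector product plus a bias vector, followed by an element-wise nonlinearity. The minimal sketch below uses made-up weights and the ReLU activation purely for illustration; `dense_layer` is a hypothetical name, not a library function.

```python
def dense_layer(W, b, x):
    """One fully connected layer: y = relu(W x + b)."""
    z = [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]
    return [max(0.0, zi) for zi in z]  # ReLU, applied element-wise

# Hypothetical weights for a layer mapping 3 input features to 2 outputs.
W = [[0.5, -1.0,  2.0],
     [1.5,  0.0, -0.5]]
b = [0.5, -1.0]
x = [1.0, 2.0, 3.0]

print(dense_layer(W, b, x))  # [5.0, 0.0]
```

Processing a whole batch of inputs at once turns the matrix-vector product into a matrix-matrix product, which is one reason hardware that multiplies matrices quickly matters so much for deep learning.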

Engineering disciplines also rely heavily on linear algebra. Structural engineers, for instance, use matrix analysis to predict how loads will deform a structure and how forces are distributed through it, ensuring safety and stability. Electrical engineers employ vector spaces and linear transformations to model circuit behaviour and to analyse signals (Lay, Lay & McDonald, 2016).
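The matrix approach to structures can be illustrated on the smallest possible example: two springs in series, fixed to a wall at one end, with a force applied at the free end. The sketch below, using made-up stiffness and load values, assembles a 2×2 stiffness matrix K so that K u = f, where u holds the unknown displacements and f the applied forces, and solves it with a hand-written Gaussian elimination routine. The function `solve` is illustrative only, and it assumes K is invertible.

```python
from fractions import Fraction

def solve(A, b):
    """Solve A x = b by Gaussian elimination with exact fractions.

    Assumes A is square and invertible (non-zero determinant).
    """
    n = len(A)
    # Augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(v) for v in row] + [Fraction(bi)] for row, bi in zip(A, b)]
    for col in range(n):
        # Find a row with a non-zero pivot and swap it into place.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        # Eliminate this column from every row below the pivot.
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            M[r] = [a - factor * p for a, p in zip(M[r], M[col])]
    # Back substitution, from the last unknown to the first.
    x = [Fraction(0)] * n
    for i in reversed(range(n)):
        s = sum(M[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (M[i][n] - s) / M[i][i]
    return x

#   wall --k1-- node 1 --k2-- node 2 <-- force F
k1, k2, F = 100, 50, 10   # example stiffnesses (N/m) and load (N)
K = [[k1 + k2, -k2],
     [-k2,      k2]]      # stiffness matrix
f = [0, F]                # external force at each node

u = solve(K, f)           # nodal displacements (m)
print(u)  # [Fraction(1, 10), Fraction(3, 10)]
```

The result matches the hand calculation u₁ = F/k₁ and u₂ = F/k₁ + F/k₂. Structural analysis software applies the same idea to far larger, sparse stiffness matrices, using specialised solvers rather than this naive routine.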

Theoretical Foundations and Education

Beyond its practical use, linear algebra is critical in developing abstract reasoning. As Clark et al. (2019) assert, studying the proofs and logical structures in linear algebra sharpens the mind for more advanced areas of mathematics. Proof techniques developed through studying vector spaces or the rank-nullity theorem equip students with a way of thinking that transcends the specifics of any one problem.
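For readers who have not met it, the rank-nullity theorem states that for an m × n matrix A, the rank (the dimension of the column space) plus the dimension of the null space N(A) equals the number of columns n. A small worked instance, with the notation N(A) chosen here for brevity:

```latex
\operatorname{rank}(A) + \dim N(A) = n, \qquad A \in \mathbb{R}^{m \times n}

A = \begin{pmatrix} 1 & 2 & 3 \\ 2 & 4 & 6 \end{pmatrix}:\quad
\operatorname{rank}(A) = 1 \ (\text{row 2 is twice row 1}), \quad
N(A) = \operatorname{span}\left\{
  \begin{pmatrix} -2 \\ 1 \\ 0 \end{pmatrix},
  \begin{pmatrix} -3 \\ 0 \\ 1 \end{pmatrix}
\right\}, \quad
1 + 2 = 3 = n
```

Both spanning vectors satisfy the single independent equation x₁ + 2x₂ + 3x₃ = 0, and neither is a multiple of the other, so the null space is two-dimensional.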

Understanding linear algebra is also a gateway to other branches of mathematics such as functional analysis, differential equations, and topology. It plays a foundational role in much of advanced mathematics.

Key Concepts in Linear Algebra

To appreciate its power, it is important to understand some core concepts:

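- Vector Spaces: These are sets of vectors that can be added together and multiplied by numbers (scalars), with the results remaining in the set. A vector space must satisfy certain axioms, such as closure, associativity, distributivity, and the existence of a zero vector and of additive inverses.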

- Matrices and Determinants: A matrix can represent the coefficients of a system of linear equations or a linear transformation. The determinant, defined for square matrices, carries information about the system: a square system Ax = b has a unique solution exactly when the determinant of A is non-zero.

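- Eigenvalues and Eigenvectors: An eigenvector of a transformation A is a non-zero vector whose direction is unchanged by it, so that Av = λv; the scalar λ is the corresponding eigenvalue. Eigenvectors reveal the directions along which a transformation simply stretches or shrinks space, and they are heavily used in physics, dynamical systems, and data analysis.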

- Orthogonality and Projections: Orthogonal vectors are perpendicular (their dot product is zero), and projecting a vector onto a subspace finds the closest point in that subspace. These ideas are crucial in techniques such as least squares approximation, used in data fitting.
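Three of these concepts can be seen at work in a short sketch, written in plain Python with hypothetical function names: a 2×2 determinant test for a unique solution, power iteration (one simple method among several) for the dominant eigenvalue and eigenvector, and a least-squares straight-line fit obtained by solving the 2×2 normal equations. The power iteration assumes the largest-magnitude eigenvalue is unique and that the starting vector is not orthogonal to its eigenvector.

```python
import math

def det2(A):
    """Determinant of a 2x2 matrix [[a, b], [c, d]]: ad - bc."""
    (a, b), (c, d) = A
    return a * d - b * c

def mat_vec(A, x):
    """Apply matrix A to vector x."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def dominant_eigenpair(A, iterations=100):
    """Power iteration: repeatedly apply A and renormalise to unit length."""
    v = [1.0] * len(A)
    for _ in range(iterations):
        w = mat_vec(A, v)
        norm = math.sqrt(sum(wi * wi for wi in w))
        v = [wi / norm for wi in w]
    # Rayleigh quotient v.(Av), valid because v has unit length.
    lam = sum(vi * wi for vi, wi in zip(v, mat_vec(A, v)))
    return lam, v

def least_squares_line(xs, ys):
    """Fit y = m x + c by solving the normal equations with Cramer's rule.

    Normal equations: [[Sxx, Sx], [Sx, n]] [m, c] = [Sxy, Sy].
    """
    n = len(xs)
    sx, sy = sum(xs), sum(ys)
    sxx = sum(x * x for x in xs)
    sxy = sum(x * y for x, y in zip(xs, ys))
    d = det2([[sxx, sx], [sx, n]])  # zero only if all x values are equal
    m = (n * sxy - sx * sy) / d
    c = (sxx * sy - sx * sxy) / d
    return m, c

# Determinant zero: the rows are parallel, so no unique solution.
print(det2([[2, 1], [4, 2]]))  # 0
print(det2([[2, 1], [1, 3]]))  # 5

# Eigenvalue (5 + sqrt 5)/2 ~ 3.618, eigenvector proportional to (1, 1.618...).
lam, v = dominant_eigenpair([[2, 1], [1, 3]])
print(lam, v)

# Best-fit line through four noisy points.
print(least_squares_line([0, 1, 2, 3], [1, 3, 5, 8]))  # (2.3, 0.8)
```

The link to orthogonality is visible in the fitted line: the residuals 0.2, -0.1, -0.4 and 0.3 sum to zero and are also orthogonal to the x values, which is exactly the condition that the fitted values are the projection of the data onto the space of straight lines.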

Importance in Physics and Natural Sciences

In physics, especially in quantum mechanics, the state of a system is often described using a vector in a complete complex inner-product space called a Hilbert space. Observable quantities such as energy or momentum are represented by Hermitian (self-adjoint) linear operators acting on these vectors. According to Griffiths (2018), understanding linear algebra is crucial for students aiming to grasp the fundamentals of quantum theory.
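The simplest illustration is a two-level system (a qubit), where states are vectors in ℂ² and observables are 2×2 Hermitian matrices; most physical Hilbert spaces are infinite-dimensional, so this is only a toy case. The sketch below, in plain Python with hypothetical helper names, checks that the three Pauli spin matrices are Hermitian and computes the expectation values ⟨ψ|A|ψ⟩ for one normalised state. That state happens to be an eigenvector of σ_y with eigenvalue +1, so the expectation is 1 for σ_y and 0 for σ_x and σ_z.

```python
import math

def mat_vec(A, x):
    """Apply matrix A to vector x (entries may be complex)."""
    return [sum(a * xi for a, xi in zip(row, x)) for row in A]

def inner(u, v):
    """Inner product <u|v>, conjugate-linear in the first argument."""
    return sum(ui.conjugate() * vi for ui, vi in zip(u, v))

def is_hermitian(A):
    """True if A equals its own conjugate transpose."""
    n = len(A)
    return all(A[i][j] == A[j][i].conjugate() for i in range(n) for j in range(n))

# Pauli matrices: spin observables along x, y, z (in units of hbar/2).
SX = [[0, 1], [1, 0]]
SY = [[0, -1j], [1j, 0]]
SZ = [[1, 0], [0, -1]]

# Normalised state (|0> + i|1>) / sqrt(2).
psi = [1 / math.sqrt(2), 1j / math.sqrt(2)]

expectations = {}
for name, op in [("x", SX), ("y", SY), ("z", SZ)]:
    # <psi|op|psi> is real for Hermitian op, up to rounding error.
    expectations[name] = inner(psi, mat_vec(op, psi)).real
    print(name, is_hermitian(op), round(expectations[name], 9))
```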

Even in biology and chemistry, linear algebra has found its place. Bioinformatics uses it to process large-scale genomic data, while chemistry employs it in the analysis of molecular orbitals and reaction networks.

Historical Development

The roots of linear algebra can be traced back to ancient China, where methods resembling modern matrix operations, including a procedure essentially equivalent to Gaussian elimination, were used to solve systems of equations, as recorded in the “Nine Chapters on the Mathematical Art” (Boyer & Merzbach, 2011). However, the modern formulation took shape in the 19th century with the work of mathematicians such as Arthur Cayley, Hermann Grassmann, and James Joseph Sylvester, who developed matrix algebra and laid the groundwork for abstract vector spaces.

Challenges and Common Misconceptions

Despite its importance, many students find linear algebra challenging. The shift from arithmetic or calculus-based mathematics to abstract algebraic structures can be disorienting. One major hurdle is the need to think in higher dimensions, which is not always intuitive.

Additionally, students often focus on rote matrix manipulation without grasping the underlying geometric interpretation. As Gilbert Strang (2016) notes in his widely used textbook, teaching linear algebra effectively requires presenting both the algebraic and the geometric perspectives so that students develop a holistic understanding.

Conclusion

Linear algebra is not just another subject in the mathematical toolkit: it is the language of systems, the architecture of transformations, and the foundation of modern computation. Its applications span much of science and engineering, while it also nurtures essential cognitive skills such as logical reasoning and abstract thinking. As our world becomes increasingly data-driven, the ability to model and interpret complex, multidimensional systems is more important than ever.

As such, learning linear algebra is not simply an academic exercise; it is a gateway to understanding and shaping the future.

References

Boyer, C. B., & Merzbach, U. C. (2011). A History of Mathematics (3rd ed.). Wiley.

Clark, D., Ryan, L., & Studham, S. (2019). Bridging the Gap: Making Abstract Mathematics Accessible through Linear Algebra. Journal of Mathematics Education, 23(2), 45–60.

Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep Learning. MIT Press. Available at: https://www.deeplearningbook.org/

Griffiths, D. J. (2018). Introduction to Quantum Mechanics (3rd ed.). Cambridge University Press.

Lay, D. C., Lay, S. R., & McDonald, J. J. (2016). Linear Algebra and Its Applications (5th ed.). Pearson.

Strang, G. (2016). Introduction to Linear Algebra (5th ed.). Wellesley-Cambridge Press.