Nanoelectronics: Applications of Nanotechnology in Modern Devices

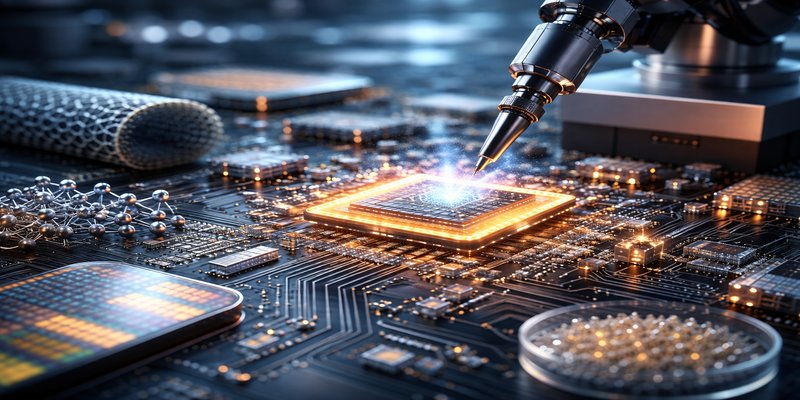

Nanoelectronics refers to the application of nanotechnology within the field of electronics. In the modern world, electronics form the backbone of communication, industry and daily life. From smartphones and medical devices to satellites and electric vehicles, electronic systems drive technological progress. At the heart of this transformation lies nanotechnology: the science of manipulating materials at dimensions between roughly 1 and 100 nanometres. At this scale, materials exhibit distinctive electrical, optical and mechanical properties that enable devices to become smaller, faster and more efficient (Hornyak et al., 2018).

The continuous evolution of electronics depends heavily on nanoscale engineering, particularly in the development of semiconductors, transistors and advanced materials. Without nanotechnology, the miniaturisation and performance gains that define the digital age would not be possible. This article explores how nanotechnology is applied in electronics, highlighting key innovations, practical examples and emerging trends, while considering technical and ethical implications.

1.0 The Foundations of Nanoelectronics

1.1 Miniaturisation and Integrated Circuits

One of the most significant applications of nanotechnology in electronics is the ongoing miniaturisation of components. Modern integrated circuits contain billions of transistors packed onto silicon chips no larger than a fingernail. This level of density is achieved through nanoscale fabrication techniques.

Hornyak et al. (2018) explain that when electronic components are reduced to the nanometre scale, their behaviour is influenced by quantum mechanical effects and increased surface area-to-volume ratios. These phenomena allow engineers to design transistors that switch faster and consume less energy. For example, contemporary microprocessors are manufactured using process nodes designated in single-digit nanometres.
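
The surface area-to-volume point can be made concrete with a little arithmetic. The sketch below is only an illustration and assumes an idealised cube of material, which is not a geometry taken from the cited sources; the function name `surface_to_volume_ratio` is likewise hypothetical. For a cube of side a, the ratio is 6a² / a³ = 6/a, so it rises as the side shrinks.

```python
def surface_to_volume_ratio(side_nm: float) -> float:
    """Surface-area-to-volume ratio (in 1/nm) of an idealised cube.

    Assumption: the component is modelled as a perfect cube of side
    `side_nm` nanometres. Real device geometries are more complex.
    """
    if side_nm <= 0:
        raise ValueError("side length must be positive")
    surface_area = 6 * side_nm ** 2  # six square faces
    volume = side_nm ** 3
    return surface_area / volume     # algebraically equal to 6 / side_nm


# Compare a millimetre-scale cube with progressively smaller ones.
for side in (1_000_000.0, 1_000.0, 100.0, 10.0, 1.0):
    ratio = surface_to_volume_ratio(side)
    print(f"side = {side:>11,.0f} nm   SA/V = {ratio:.6f} per nm")
```

Each tenfold reduction in side length raises the ratio tenfold, which is one way to see why surface effects that are negligible in bulk material become significant at the nanometre scale.
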
Fabrication at this scale enables high-speed computing in smartphones, laptops and data centres, supporting everything from social media platforms to cloud computing infrastructure.

1.2 Advanced Lithography Techniques

The fabrication of nanoscale circuits relies on sophisticated processes such as extreme ultraviolet (EUV) lithography. This technique uses light of very short wavelength (about 13.5 nm) to project intricate patterns onto a light-sensitive resist coating the semiconductor wafer; subsequent etching steps transfer those patterns into the wafer. Without nanoscale lithography, modern microchips could not be produced (Hornyak et al., 2018). This technology demonstrates how nanotechnology directly supports the growth of the global electronics industry, enabling the compact and energy-efficient devices that define contemporary consumer culture.

2.0 Nanomaterials in Electronic Devices

2.1 Carbon Nanotubes

Carbon nanotubes (CNTs) are cylindrical nanostructures composed of carbon atoms arranged in a hexagonal lattice. They possess exceptional electrical conductivity, tensile strength and thermal stability. According to Allhoff, Lin and Moore (2009), CNTs have the potential to replace silicon in certain transistor applications due to their superior electron mobility.

Researchers have already demonstrated carbon nanotube transistors that operate efficiently at nanoscale dimensions. In practical terms, CNT-based components may lead to faster processors, flexible electronic displays and more durable wearable devices.

2.2 Graphene and Two-Dimensional Materials

Another promising material is graphene, a single layer of carbon atoms arranged in a two-dimensional hexagonal lattice. Graphene exhibits extraordinary electrical conductivity and mechanical flexibility, making it a strong candidate for next-generation electronics. The European Commission’s Graphene Flagship initiative (European Commission, 2023) highlights graphene’s potential in high-frequency transistors, sensors and transparent conductive films.
Flexible smartphones and foldable displays could draw on such nanomaterials to combine durability with performance. Beyond graphene, other two-dimensional materials such as molybdenum disulphide (MoS₂) are being explored for nanoelectronic applications, offering alternatives as silicon approaches its physical limits.

3.0 Energy Efficiency and Thermal Management

As electronic devices become more powerful, managing heat dissipation and energy consumption becomes increasingly important. Nanoscale engineering provides solutions to these challenges.

3.1 FinFET and 3D Transistors

Modern processors use FinFET (Fin Field-Effect Transistor) technology, in which the transistor channel is a thin fin raised above the substrate and wrapped by the gate on three sides. This three-dimensional nanoscale design gives the gate tighter control over the channel current and reduces leakage, enhancing energy efficiency. The result is longer battery life in portable devices and reduced electricity demand in data centres. According to the International Energy Agency (IEA, 2022), improvements in semiconductor efficiency contribute significantly to lowering the environmental impact of digital infrastructure.

3.2 Nanomaterials for Heat Control

Nanomaterials such as graphene and carbon nanotubes also have high thermal conductivity, allowing heat to dissipate more effectively from electronic components. Efficient heat management improves device reliability and prolongs lifespan. For instance, nano-enhanced thermal interface materials are used in high-performance computing systems to maintain stable operating temperatures.

4.0 Nanoelectronics in Consumer Technology

Nanotechnology directly influences everyday consumer products. Smartphones, smart watches and tablets depend on nanoscale processors and memory chips.

4.1 Memory and Data Storage

Advanced memory technologies such as flash memory and emerging memristor-based systems rely on nanoscale structures to store information more densely.
Smaller memory cells increase storage capacity while reducing physical device size. This capability supports the growing demand for data-intensive applications such as streaming services, artificial intelligence and cloud storage.

4.2 Displays and Optical Electronics

Nanotechnology enhances display technology through the use of quantum dots, semiconductor nanoparticles that emit precise wavelengths of light depending on their size (smaller dots emit shorter, bluer wavelengths). Quantum dot displays offer brighter colours, improved contrast and greater energy efficiency than conventional screens (Hornyak et al., 2018). These innovations illustrate how nanoscale science translates into tangible improvements in consumer experience.

5.0 Emerging Frontiers in Electronics

5.1 Flexible and Wearable Electronics

The integration of nanomaterials into flexible substrates has enabled the development of wearable electronics and flexible sensors. Such devices are increasingly used in healthcare monitoring, sports performance analysis and environmental sensing. Graphene-based sensors, for example, can detect minute biological signals, offering applications in medical diagnostics.

5.2 Quantum and Molecular Electronics

As traditional silicon scaling approaches physical limitations, researchers are exploring quantum electronics and molecular-scale devices. These systems rely on nanoscale fabrication to control electron behaviour with extraordinary precision. The National Institute of Standards and Technology (NIST, 2023) notes that quantum devices may revolutionise computing, communication and cryptography in the coming decades.

Ethical and Environmental Considerations

While nanoelectronics offers remarkable benefits, it also presents challenges. The rapid turnover of electronic devices contributes to electronic waste (e-waste), raising concerns about sustainability and resource consumption. Moreover, the manufacturing of nanoscale components requires significant energy and specialised materials.
Responsible innovation demands environmentally conscious design and recycling systems (Allhoff, Lin and Moore, 2009). There are also concerns regarding data privacy and surveillance, as increasingly …